Spotting Deepfake Scams: How Seniors Are Learning to Outsmart AI Fraudsters

By Jane Miller | Senior Privacy News | September 12, 2025

When Rosemary, 72, received a desperate call from her “grandson,” pleading for help from a foreign jail, her heart raced—but something about the voice was just slightly off. She hung up and called her daughter, who quickly confirmed that the grandson was safe at home. What Rosemary didn’t know was that she’d just dodged one of the fastest-growing threats facing older adults in 2025: deepfake scams powered by artificial intelligence.

The New Face of Elder Fraud

Scammers looking to trick seniors out of money or sensitive information are increasingly turning to AI-driven “deepfakes”: digital forgeries of voice, video, and images. The tools behind these scams can clone a voice from just a few seconds of online recordings or craft fake videos that are nearly indistinguishable from the real thing. According to the FBI's Internet Crime Complaint Center, losses from elder fraud have soared, hitting $4.88 billion in 2024, and experts say deepfake scams are a driving force behind this spike.[1]

A recent study found that 39% of people over age 65 are susceptible to AI-generated deception, including voice and video scams, a much higher rate than among younger groups. The emotional pull of hearing a loved one’s voice or seeing a familiar face on a video call can override caution, especially for those less familiar with what these technologies can do.[2][3]

How Scammers Target Seniors

Deepfake scams typically begin with information culled from social media, public databases, or even obituaries—giving criminals personal details they need to make the deception convincing. Some common tactics include:

- Grandparent scams — A cloned voice calls, urgently requesting money or sensitive info "for an emergency."

- Bank or tech support scams — Fake alerts warn of suspicious activity, followed by a deepfaked call or video from "support" staff.

- Government scams — Impersonators claim to be from Social Security or Medicare, using AI-generated voices to drive urgency and confusion.

As technology improves, these scams become harder to spot. In one focus group, 70% of older adults admitted they'd have trouble telling a real voice from a deepfake.[2]

Quick Facts

- Deepfakes account for 6.5% of all fraud attacks as of 2025, a 2,137% increase since 2022.[4]

- 1 in 10 adults over 60 have received a deepfake voice scam call in the past year.[2]

- Older victims report median financial losses of over $15,000 per scam incident.[5]

Knowledge Is Power: Training Programs Make a Difference

In response to the surge, organizations like AARP and the National Council on Aging now run digital security workshops focused on deepfake awareness. Seniors learn what red flags to watch for—unexpected requests for money or secrecy, slight voice oddities, and urgency without verification.

A new wave of “intergenerational tech training,” which pairs older adults with younger family members or volunteers, has proven especially effective. Focus groups show that seniors who took part in these programs were twice as likely to spot voice and video deepfakes as those who had not.[6]

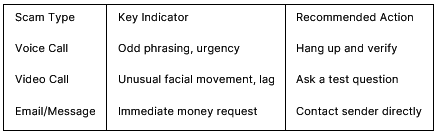

Recognizing Deepfake Red Flags

Taking Action: Senior Smart Strategies

Experts recommend that seniors always verify unexpected calls or requests by reaching out through a trusted channel, such as a phone number they already know. Using caller ID and reverse lookups, and keeping family members regularly updated, helps build a web of protection. For financial transactions especially, a policy of “pause and confirm” (taking a moment to call or text a known relative before sending funds) can stop deepfake scams from succeeding.

Key Takeaways

- AI-powered deepfake scams are an urgent and growing threat targeting seniors, with financial and emotional consequences.

- Scammers use both voice and video tools, pulling details from social media and public records for personalized attacks.

- Training programs and intergenerational support are proven to help older adults recognize and resist deepfake deception.

- Seniors should always pause, verify, and consult before sending money or sharing sensitive info in response to a call or video.

Disclaimer

This article is for informational purposes only and is not intended as legal or financial advice. Seniors concerned about deepfake scams should seek support from trusted organizations and never send money without verifying requests.

References

- AI-driven elder fraud: Deepfakes; the newest threat: https://www.abrigo.com/blog/ai-driven-elder-fraud-deepfakes-the-newest-threat/

- Deepfake Statistical Data (2023–2025): https://views4you.com/deepfake-database/

- Deepfake Attacks & AI-Generated Phishing: 2025 Statistics: https://zerothreat.ai/blog/deepfake-and-ai-phishing-statistics

- False alarm, real scam: how scammers are stealing older adults’ life savings: https://www.ftc.gov/news-events/data-visualizations/data-spotlight/2025/08/false-alarm-real-scam-how-scammers-are-stealing-older-adults-life-savings

- Understanding Deepfakes: What Older Adults Need to Know: https://www.ncoa.org/article/understanding-deepfakes-what-older-adults-need-to-know/

- Yahoo! News: Study on older adults and deepfake susceptibility: https://www.yahoo.com/tech/deeply-troubling-study-data-shows-221600178.html

- Intergenerational Support for Deepfake Scams Targeting Older Adults: https://www.usenix.org/system/files/soups2025_poster10_abstract-larubbio.pdf